[AINews] GraphRAG: The Marriage of Knowledge Graphs and RAG • Buttondown

Chapters

AI Twitter Recap

AI Discord Recap

Innovative Projects and Discussions in Various AI Discords

Using MultiQueryRetrieval to Trigger Fallbacks and Fixes

Model Announcements

HuggingFace Announcements

HuggingFace Discussion on reading-group

Unsloth AI - Showcasing Discord LLM Frontend

Discord Community Collaborations

Apple Technologies and Models

Mojo Promising Applications and Constrained Inference

LlamaIndex Discord Conversations

Torchtune General Chat

Research Messages and Model Evaluations

Buttondown: The Easiest Way to Start and Grow Your Newsletter

AI Twitter Recap

LLM Model Releases and Improvements

- Gemma 2 models released: @rasbt noted that the Gemma 2 models explore techniques beyond simply increasing dataset size, focusing on developing small, efficient LLMs. Key design choices include sliding window attention, grouped-query attention, and RMSNorm. Gemma 2 is almost as good as the 3x larger Llama 3 70B.

- Anthropic's Claude 3.5 Sonnet model: @alexandr_wang reported that Claude 3.5 Sonnet is now #1 on Instruction Following and Coding in ScaleAI's hidden evals. However, it loses points on writing style and formatting compared with other top models.

- Nvidia's Nemotron 340B model: @osanseviero shared that Nemotron, a 340B parameter model, was released as part of the June open model releases.

- Qwen2-72B tops HuggingFace Open LLM Leaderboard: @rohanpaul_ai noted that Qwen2-72B scores 43.02 on average, excelling in math, long-range reasoning, and knowledge. Interestingly, Llama-3-70B-Instruct scores 15 points lower than its pretrained base version on GPQA.
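Sliding window attention, the first of the Gemma 2 design choices listed above, restricts each token to attending to a fixed number of recent positions. The sketch below builds that attention mask in plain Python; the function name and the window size are illustrative assumptions, not Gemma 2's actual code or configuration.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask[i][j] == True where query position i may attend to key j.

    The mask is causal (j <= i) and limited to the most recent `window`
    positions (i - j < window), which is what sliding window attention means.
    """
    return [
        [0 <= i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    # Illustrative sizes only; print the mask as rows of 1s and 0s.
    for row in sliding_window_mask(seq_len=6, window=3):
        print("".join("1" if allowed else "0" for allowed in row))
```

With `seq_len=6` and `window=3` the printed rows are `100000`, `110000`, `111000`, `011100`, `001110`, `000111`: each position sees at most three keys, so attention cost grows with the window rather than with the full sequence length.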

Retrieval Augmented Generation (RAG) Techniques and Challenges

- RAG Basics Talk: @HamelHusain shared a talk on RAG Basics from the LLM conf, covering key concepts and techniques.

- Limitations of RAG: @svpino discussed the limitations of RAG, including challenges with retrieval, long context windows, evaluation, and configuring systems to provide sources for answers.

- Improving LLM Context Usage in RAG: @AAAzzam shared a tip for getting LLMs to use context more effectively: LLMs rely on information from function calls far more than on general context, so transforming context into pseudo function calls can improve results.
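The pseudo-function-call tip can be sketched as message construction: instead of pasting retrieved chunks into the prompt, present them as the result of a tool call the assistant supposedly made. This minimal sketch assumes the OpenAI-style chat message layout; the tool name `search_documents` and the call ID are hypothetical, and no tool is actually executed.

```python
import json


def context_as_pseudo_tool_call(question: str, chunks: list[str],
                                tool_name: str = "search_documents") -> list[dict]:
    """Wrap retrieved chunks so they look like a tool call and its result.

    `search_documents` is a hypothetical tool name; nothing is executed.
    """
    call_id = "call_0"  # arbitrary ID linking the call to its result
    return [
        {"role": "user", "content": question},
        {   # The assistant "decides" to call a retrieval tool...
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": call_id,
                "type": "function",
                "function": {
                    "name": tool_name,
                    "arguments": json.dumps({"query": question}),
                },
            }],
        },
        {   # ...and the "tool" returns the retrieved context.
            "role": "tool",
            "tool_call_id": call_id,
            "content": json.dumps({"results": chunks}),
        },
    ]


if __name__ == "__main__":
    messages = context_as_pseudo_tool_call(
        "What is GraphRAG?", ["chunk about knowledge graphs", "chunk about RAG"])
    print(json.dumps(messages, indent=2))
```

Pass the returned list as the conversation history when requesting the final answer. Whether a provider accepts tool messages without a matching tool declaration varies, so check its API documentation.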

Synthetic Data Generation and Usage

- Persona-Driven Data Synthesis: @omarsar0 shared a paper proposing a persona-driven data synthesis methodology to generate diverse synthetic data. It introduces 1 billion diverse personas to help create data covering a wide range of perspectives. A model fine-tuned on 1.07M synthesized math problems achieves 64.9% on MATH, matching GPT-4 performance at only 7B scale.

- AutoMathText Dataset: @rohanpaul_ai highlighted the 200GB AutoMathText dataset of mathematical text and code for pretraining mathematical language models. The dataset consists of content from arXiv, OpenWebMath, and programming repositories/sites.

- Synthetic Data for Math Capabilities: @rohanpaul_ai noted a paper showing synthetic data is nearly as effective as real data for improving math capabilities in LLMs. LLaMA-2 7B models trained on synthetic data surpass previous models by 14-20% on GSM8K and MATH benchmarks.
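The core move in persona-driven synthesis is to condition the same generation prompt on many different personas, so the generator writes from many perspectives. This sketch shows only that prompt-construction step; the three personas and the template are made-up placeholders for the paper's far larger persona pool, and the prompts would still need to be sent to an LLM.

```python
import random

# Hypothetical persona pool; the paper works with 1 billion personas.
PERSONAS = [
    "a structural engineer who designs pedestrian bridges",
    "a high-school chemistry teacher",
    "a logistics planner for a regional bakery chain",
]

# Hypothetical task template; only the persona slot changes between prompts.
TEMPLATE = (
    "You are {persona}.\n"
    "Write one challenging math word problem that someone like you might "
    "face in your work. Reply with the problem only."
)


def persona_prompts(n: int, seed: int = 0) -> list[str]:
    """Build n synthesis prompts, each conditioned on a randomly sampled persona."""
    rng = random.Random(seed)  # seeded for reproducible sampling
    return [TEMPLATE.format(persona=rng.choice(PERSONAS)) for _ in range(n)]


if __name__ == "__main__":
    for prompt in persona_prompts(2):
        print(prompt, end="\n\n")
```

Because the task text stays fixed, diversity in the generated problems comes from the persona slot, which is why the size and variety of the persona pool matter.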

Miscellaneous

- 8-bit Optimizers via Block-wise Quantization: @rohanpaul_ai revisited how block-wise quantization enables 8-bit optimizers.
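Block-wise quantization splits a tensor into fixed-size blocks and stores one scale per block, so a single outlier only degrades precision inside its own block. The sketch below uses plain linear absmax quantization to int8-range codes as a simplification; the 8-bit optimizer work uses a non-linear quantization map, and the block sizes here are arbitrary.

```python
def quantize_blockwise(values: list[float],
                       block_size: int = 64) -> tuple[list[int], list[float]]:
    """Quantize floats to int8-range codes with one absmax scale per block.

    Simplification: linear absmax quantization. The 8-bit optimizers work
    pairs block-wise scaling with a non-linear (dynamic) quantization map.
    """
    codes: list[int] = []
    scales: list[float] = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        absmax = max(abs(v) for v in block)
        scale = absmax / 127 if absmax > 0 else 1.0  # avoid dividing by zero
        scales.append(scale)
        codes.extend(round(v / scale) for v in block)  # codes lie in [-127, 127]
    return codes, scales


def dequantize_blockwise(codes: list[int], scales: list[float],
                         block_size: int = 64) -> list[float]:
    """Map codes back to floats using the scale of the block each code is in."""
    return [c * scales[i // block_size] for i, c in enumerate(codes)]


if __name__ == "__main__":
    values = [0.01, -0.02, 0.03, 0.015, 5.0, -4.0, 2.5, 1.0]
    codes, scales = quantize_blockwise(values, block_size=4)
    print(codes, scales)
    print(dequantize_blockwise(codes, scales, block_size=4))
```

In this example the first block gets its own small scale. With a single scale for all eight values, set by the 5.0 outlier, 0.01 would quantize to code 0 and be lost.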

AI Discord Recap

The Discord platform hosts various discussions and updates related to AI advancements. Some highlights include new model releases like Phi-3 Mini and Gemma 2, benchmarking efforts with tools like lm_eval, and collaborations in open-source AI development. Additionally, there are discussions on hardware considerations, vision-language models like Vistral 7B, and AI applications in fashion and e-commerce. The community is exploring ways to optimize training and inference, quantization techniques, and the potential of specialized hardware. The Discord channels provide a platform for knowledge sharing and community engagement in the AI field.

Innovative Projects and Discussions in Various AI Discords

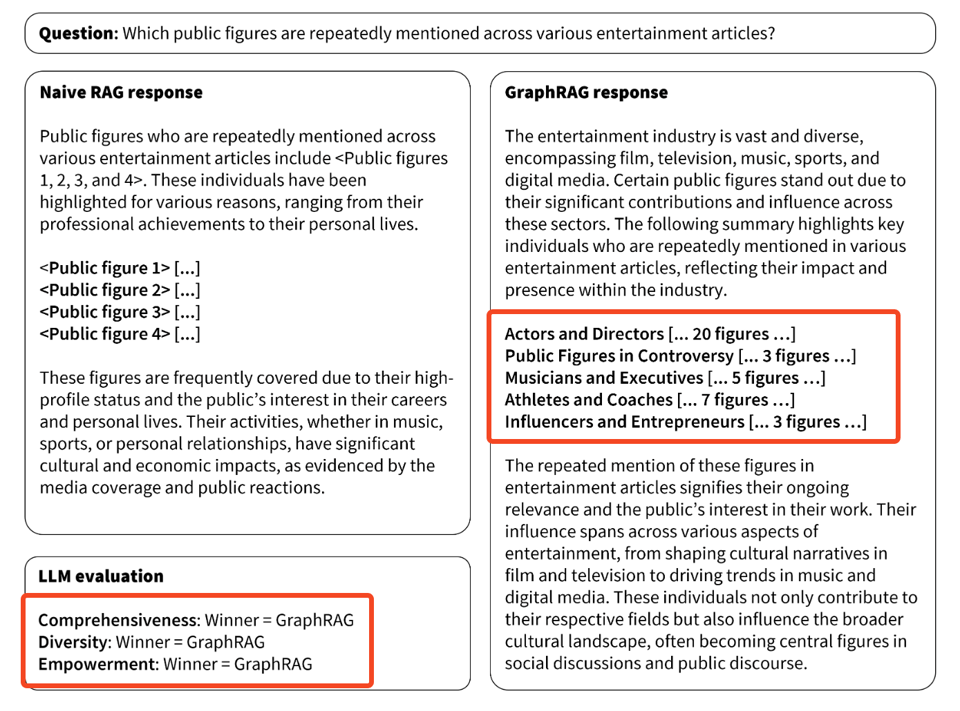

This section covers projects, discussions, and breakthroughs across several AI-related Discord servers. Topics include generating data from a billion personas, new model checkpoints, synthetic data creation, performance-enhancing model updates, and the challenges of running AI applications on systems with limited VRAM. Members also shared insights on anti-AI software designed to protect artists' work, model performance evaluations, the limitations and challenges of multimodal models, and new designs such as the Graph RAG architecture. Community collaboration, troubleshooting, and model optimization are recurring themes across these communities.

Using MultiQueryRetrieval to Trigger Fallbacks and Fixes

This section covers using MultiQueryRetrieval and the fallbacks and fixes it can trigger. The conversation extends to sharded databases, in particular how queries are mapped to shards, and members also shared insights on serverless MongoDB Atlas. Other topics include API aspirations, file-upload questions, and using LangServe. LangChain chatbots were explored for scheduling demos and for enhancing human connections using Agents and AgentDirector. CriticGPT's progress in refining GPT-4's outputs is also highlighted, along with a discussion of the RAFT methodology and opportunities to collaborate on LLM-powered chatbots.
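Multi-query retrieval runs several rephrasings of a question through the same retriever and merges the results; a fallback can then catch the case where nothing comes back. In LangChain's MultiQueryRetriever an LLM writes the rephrasings; this sketch takes them as input instead, and the function name and the `fallback` retriever are hypothetical additions for illustration.

```python
from typing import Callable

# A retriever maps a query string to a ranked list of documents.
Retriever = Callable[[str], list[str]]


def multi_query_retrieve(query: str, variants: list[str],
                         retriever: Retriever, fallback: Retriever) -> list[str]:
    """Merge results for a query and its rephrasings; fall back if all are empty.

    `variants` would normally be LLM-generated rephrasings of `query`.
    `fallback` is a hypothetical second retriever (for example, a keyword index).
    """
    seen: set[str] = set()
    merged: list[str] = []
    for q in [query, *variants]:
        for doc in retriever(q):
            if doc not in seen:  # keep a unique union in first-seen order
                seen.add(doc)
                merged.append(doc)
    # Fallback: only triggered when no query returned anything.
    return merged if merged else fallback(query)


if __name__ == "__main__":
    docs = ["graph rag", "vector search", "knowledge graph basics"]

    def keyword_retriever(q: str) -> list[str]:
        # Toy retriever: documents containing any word of the query.
        return [d for d in docs if any(w in d for w in q.split())]

    print(multi_query_retrieve("graph", ["vector"], keyword_retriever,
                               fallback=lambda q: ["<no results>"]))
```

With the toy keyword retriever, the call returns the union of both queries' hits, `['graph rag', 'knowledge graph basics', 'vector search']`; the fallback only runs when every query comes back empty.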

Model Announcements

Microsoft has updated Phi 3 mini to Phi 3.1, with vastly improved performance, better instruction following, and improved output structuring. The update is available on lmstudio community, where users emphasized its significance. The Gemma 2 models on lmstudio community have also been updated with the latest changes, so users can safely redownload them and use them with version 0.2.27. The updated models are Gemma 2 9b it GGUF and Gemma 2 27b it GGUF.

HuggingFace Announcements

The HuggingFace Announcements section covers recent releases, including new models added in Transformers 4.42 such as Gemma 2, RT-DETR, and LLaVA-NeXT-Video. AWS released the Chronos datasets, nearly 100k public models now use the Hub to store TensorBoard logs, Google introduced its high-quality Gemma 2 LLMs, and Microsoft's Florence-2 can now run locally with WebGPU. Other highlights include the introduction of vision language models and a new RAG recipe using Elasticsearch.

HuggingFace Discussion on reading-group

Discussions in the HuggingFace reading-group channel revolved around new AI models and their applications. Members shared updates on the recent launch of the CriticGPT model by OpenAI, aimed at identifying errors in GPT-4 generated code. Additionally, the launch of the Embodied Agents Toolkit, which facilitates the integration of multimodal transformers into robotics, was announced. The toolkit includes support for the Gradio interface and HuggingFace datasets, with developers seeking feedback to enhance user experience. Other topics discussed included the Terminator architecture, advanced CNN resources, and prompting etiquette reminders within the HuggingFace community.

Unsloth AI - Showcasing Discord LLM Frontend

Discord as an LLM Frontend with llmcord.py: jakobdylanc introduced llmcord.py, a script that lets the community interact with large language models directly on Discord. The script supports user engagement and feedback within Discord, an inventive approach to using language models for interactive communication.

Discord Community Collaborations

This section highlights interactions and collaborations within the Discord community around AI projects and tools. It includes community feedback on projects like llmcord.py, collaborative tutorial releases, requests for feedback on Colab notebooks, and discussions of new releases such as Google's Gemma 2. It also covers scaling synthetic data creation, generalized knowledge distillation, and discussions in the Stability.ai community about Stable Diffusion and the potential of anti-AI art software.

Apple Technologies and Models

Apple uses variable bit quantization in its on-device large language models (LLMs) to improve their performance. The technique was detailed in Apple's Talaria tool, published in April 2024. The foundation models introduced at the Worldwide Developers Conference 2024 include a 3-billion-parameter on-device LLM and a server-based model for Private Cloud Compute. Separately, LeopolisDream teased a new Terminator architecture without residuals, dot product attention, or normalization, described in detail in an arXiv paper.
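Variable bit quantization assigns different bit widths to different tensors, so the model's weight storage follows from a weighted average. The arithmetic below is a sketch with hypothetical layer sizes and bit widths, not Apple's actual configuration, and it ignores the small overhead of stored quantization scales.

```python
def mixed_precision_summary(layers: list[tuple[int, int]]) -> tuple[float, float]:
    """Return (average bits per weight, total weight storage in bytes).

    `layers` holds hypothetical (parameter_count, bit_width) pairs, i.e. the
    bit width a variable-bit scheme assigned to each tensor. Scale/zero-point
    overhead is ignored.
    """
    total_params = sum(n for n, _ in layers)
    total_bits = sum(n * bits for n, bits in layers)
    return total_bits / total_params, total_bits / 8


if __name__ == "__main__":
    # 1,000 weights at 4 bits plus 3,000 weights at 2 bits.
    print(mixed_precision_summary([(1_000, 4), (3_000, 2)]))
```

This example prints `(2.5, 1250.0)`: 10,000 bits spread over 4,000 weights, or 1,250 bytes. Spending more bits only on the tensors that need them is what lets the average fall between the chosen widths.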

Mojo Promising Applications and Constrained Inference

This section discusses potential applications of Mojo beyond attention tweaking, including model- and simulator-based RL for LLM agents, symbolic reasoning, inference-time search, and sub-symbolic model steering. Members asked whether anyone is already working on these applications in Mojo. There was also a conversation about constrained inference methods such as guidance, Outlines, or SGLang, with members suggesting that Mojo is well suited to exploring them.
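Constrained inference methods such as guidance, Outlines, and SGLang work by masking out tokens that would violate a constraint before the next token is chosen. This sketch shows the idea for the simplest constraint, a fixed set of allowed outputs, with greedy decoding; the `score` callback is a hypothetical stand-in for a model's logits, and real libraries compile richer constraints (regexes, grammars) over a real tokenizer.

```python
from typing import Callable, Sequence

Tokens = tuple[str, ...]


def constrained_choice(choices: Sequence[Tokens],
                       score: Callable[[Tokens, str], float]) -> Tokens:
    """Greedy decoding whose output must be exactly one of `choices`.

    At every step, tokens that would leave the set of allowed prefixes are
    masked out and the best-scoring remaining token is appended. `score` is
    a stand-in for model logits. Simplification: if one choice is a prefix
    of another, decoding stops at the shorter one.
    """
    prefix: Tokens = ()
    while prefix not in choices:
        # Mask: next tokens that keep the prefix consistent with some choice.
        allowed = {c[len(prefix)] for c in choices
                   if len(c) > len(prefix) and c[:len(prefix)] == prefix}
        if not allowed:
            raise ValueError("no allowed continuation")
        # Sorting makes tie-breaking deterministic.
        best = max(sorted(allowed), key=lambda tok: score(prefix, tok))
        prefix = prefix + (best,)
    return prefix


if __name__ == "__main__":
    labels = [("pos", "itive"), ("neg", "ative"), ("neu", "tral")]

    def fake_score(prefix: Tokens, tok: str) -> float:
        # A fake "model" that would much rather say "banana".
        return 10.0 if tok == "banana" else {"neu": 1.0}.get(tok, 0.0)

    print(constrained_choice(labels, fake_score))  # ('neu', 'tral')
```

Even though the scoring function prefers a token outside the allowed set, the result is always one of the allowed labels, because disallowed tokens are never candidates.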

LlamaIndex Discord Conversations

Discussions on LlamaIndex Discord channel revolved around various topics related to AI and technology. Users shared insights on translating Python systems into microservices, building superior knowledge assistants, and issues with building various tools like chatbots and agents. In another section, users discussed topics related to the Tinygrad project, including error handling, memory issues, and equivalent functionalities to torch.no_grad(). The LangChain AI channel highlighted discussions on building chatbots, Python code guidance, and collaborative opportunities. Additionally, conversations on Runway Gen 3, Sonnet + Artifacts, Figma AI, Microsoft's Phi-3 Mini updates, and Magic Dev's valuation were detailed in the Latent Space AI chat.

Torchtune General Chat

Hardware requirements for running llama.cpp, Release of llamafile v0.8.9, Testing mxbai-embed-large-v1 model, Choosing hardware for running large language models, CPU vs GPU for AI model training and inference

- Llamafile minimum hardware requirements debated: Members discussed whether an iPhone 13 or a Raspberry Pi Zero W could run llama.cpp, clarifying that a 64-bit system is required, along with enough memory for the specific model.

- Llamafile v0.8.9 release adds Android support: The latest release confirms Android support, improves Gemma 2 model alignment, and makes progress on a new embedding server.

- Fix for mxbai-embed-large-v1 model issue: All text inputs were returning the same vector; changing the input key from 'text' to 'content' resolved it.

- Choosing optimal hardware for llamafile: Members discussed the best setups for running large language models, balancing VRAM and CPU memory for good model performance.

- Exploring CPU potential in model training: Members highlighted the advantage GPUs hold over CPUs for model training because training is so computationally demanding, stressing the limits of CPUs in extensive training scenarios.
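The mxbai-embed-large-v1 fix described above comes down to the JSON key in the embedding request. This sketch assumes a llamafile (llama.cpp) server with embeddings enabled at `http://localhost:8080/embedding`; the URL and the helper names are assumptions, and the response format depends on the server version.

```python
import json
import urllib.request

# Assumption: llamafile / llama.cpp server with embeddings enabled on port 8080.
EMBEDDING_URL = "http://localhost:8080/embedding"


def embedding_request_body(text: str) -> bytes:
    """JSON body for the embedding endpoint, with the text under "content"."""
    # Per the report above, sending the text under "text" gave identical vectors.
    return json.dumps({"content": text}).encode("utf-8")


def embed(text: str, url: str = EMBEDDING_URL) -> object:
    """POST one text and return the parsed JSON response (shape varies by version)."""
    request = urllib.request.Request(
        url,
        data=embedding_request_body(text),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

A quick sanity check after switching keys is to embed two clearly different sentences and confirm the returned vectors differ.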

Research Messages and Model Evaluations

- Corey Lynch announced that Figure 1 is now running full end-to-end BMW use cases, with manipulations learned as 200 Hz pixel-to-action neural networks.

- LAION research messages discussed the complexities of ML model evaluation, a novel LLM called phi-CTNL, validation of the correct solution to the AIW+ problem, clarification of problem-solving assumptions, and a new model architecture called Terminator.

- LLM Finetuning discussions included streamlining audio processing with the Chainlit Cookbook, questions about knowledge graphs and LangGraph, the availability of recordings of recent talks, processing large datasets from Kaggle competitions, and running Dask jobs on Modal.

- Discussions in AI Stack Devs included a request for a Docker port, a PR for the Docker port, and a GitHub page for setting up AI Town on Windows using WSL.

- Interconnects highlighted Apple securing an observer seat at OpenAI, Microsoft vs. Apple OpenAI deals, foreign gifts to U.S. officials, and discussions on the evolution of internet browsing on mobile phones.

Buttondown: The Easiest Way to Start and Grow Your Newsletter

Buttondown is a platform that simplifies the process of starting and expanding your newsletter. With Buttondown, you can easily create and manage your newsletter, making it an ideal tool for individuals looking to grow their subscriber base.

FAQ

Q: What are some recent model releases and improvements in the LLM domain?

A: Recent model releases include Gemma 2, Claude 3.5 Sonnet, Nemotron 340B, and Qwen2-72B, each showcasing different design choices and performance characteristics.

Q: What are some key design choices in the Gemma 2 models?

A: Key design choices in the Gemma 2 models include sliding window attention, grouped-query attention, and RMSNorm, all aimed at building small, efficient LLMs.

Q: How does Gemma 2 performance compare to the larger Llama 3 70B model?

A: Gemma 2 has been noted to be almost as good as the 3x larger Llama 3 70B model.

Q: How does Anthropic's Claude 3.5 Sonnet rank in ScaleAI's evaluations?

A: Claude 3.5 Sonnet ranks #1 on Instruction Following and Coding in ScaleAI's hidden evaluations, although it loses points on writing style and formatting.

Q: What is the significance of Nvidia's Nemotron 340B model release?

A: Nvidia released the Nemotron 340B as part of the June open model releases, adding a 340B parameter model to the landscape of LLMs.

Q: How does Qwen2-72B perform on the HuggingFace Open LLM Leaderboard?

A: Qwen2-72B tops the leaderboard with an average score of 43.02, showing particular strength in math, long-range reasoning, and general knowledge.

Q: What are some challenges associated with Retrieval Augmented Generation (RAG) techniques?

A: Challenges with RAG include retrieval quality, managing long context windows, evaluating systems, and configuring systems to provide sources for their answers.

Q: How can LLMs be encouraged to use context more effectively in RAG?

A: One tip is to transform context into pseudo function calls, because LLMs use information from function calls far more than information from general context.

Q: What does persona-driven data synthesis propose?

A: It proposes generating diverse synthetic data using 1 billion diverse personas, so that the content covers a wide range of perspectives.

Q: How effective is synthetic data in improving math capabilities in LLMs?

A: A paper shows that synthetic data is nearly as effective as real data for improving math capabilities in LLMs: LLaMA-2 7B models trained on synthetic data surpass previous models by 14-20% on the GSM8K and MATH benchmarks.
