[AINews] AI2 releases OLMo - the 4th open-everything LLM

Discord Summaries

This section summarizes the key discussions across AI-focused Discord servers. Topics include AI models, programming languages, GPU shortages, open-source versus proprietary software, fine-tuning LLMs, weight freezing, upcoming models such as Qwen2, training with 4-bit optimizer states, the value of n-gram models, challenges with backdoor training, and completed projects such as Project Obsidian. Each server covers a different aspect of AI development and research.

Perplexity AI Discord Summary

User @tbyfly was directed to share a humorous Perplexity AI response in another channel after first asking about it in the general chat. Members discussed the PPLX models, highlighting limitations of the 7b-online model, and suggested ways to make the CodeLlama 70b model more consistent. User @bartleby0 proposed setting Perplexity AI as the default search engine and mentioned Arc Search as a competitor. New member @.sayanara shared a blog post about AI's role in mitigating misinformation, while another user noted Facebook's absence from a top apps list. Users also raised subscription issues and problems with API credits after subscribing. Overall, the discussions covered model limitations, AI applications, and amusing encounters with Perplexity AI.

DiscoResearch Discord Summary

Switching to Mixtral for Bigger Gains:

- @_jp1 confirmed the migration from a 7b model to Mixtral and invited help with the transition.

API Exposed:

- @sebastian.bodza raised a concern that the API lacks security measures, which poses a risk.

Nomic Outperforms OpenAI:

- Nomic's embedding model, which supports a sequence length of 8192, outperforms OpenAI's counterparts and is available on Hugging Face.

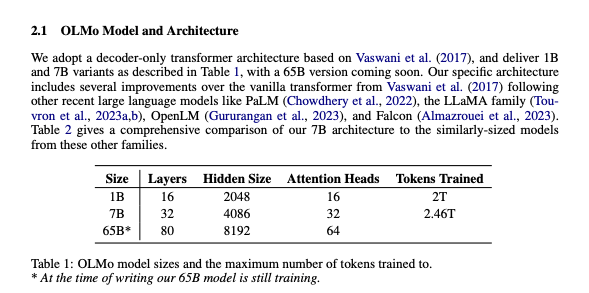

OLMo 7B Enters the Open Model Arena:

- Allen AI introduced OLMo 7B, with the dataset, training resources, and research paper all accessible.

GPU Workarounds for Inference and Shared Resources:

- Members proposed inference alternatives for when GPUs are unavailable and hinted at group hosting if needed.

Mistral Office Hour and Pricing Discussion

The pricing conversations cover the availability and cost of dedicated endpoints on La Plateforme, with custom endpoints estimated at around $10k per month. Participants discussed revenue benchmarks for custom endpoints and were advised to contact Mistral for precise pricing. They expect enterprise deployment to be expensive and considered whether their budget estimates would justify starting a partnership. The Mistral office-hour discussions include requests for clarity on system prompts, questions about Mixtral's parameter potential, and explanations of instruct models. Feature requests for the Mistral API were raised, and hints of future enhancements suggest ongoing work to address them.

HuggingFace Discord Highlights

This section highlights discussions and announcements from the HuggingFace Discord channels. It covers the release of the Ukrainian wav2vec2 bert model, links to HuggingFace spaces and projects, video generation tools, dataset selection for academic projects, advances in compression algorithms, and AI challenges in law. It also features new tools such as MobileDiffusion for text-to-image generation and I-BERT for accelerated RoBERTa inference. Members also gave presentations and shared resources such as free LLM API access, the UltraTextbooks dataset, and guides on integrating tools to improve machine learning workflows.

HuggingFace LM Studio and LAION Discussions

The Hugging Face, LM Studio, and LAION Discord channels feature discussions on model training and performance optimization. Users share insights on model training scripts and new features, and seek advice on model compatibility with different hardware configurations. The LAION channel also discusses advancements in the LLaMA model and gathers feedback on the GGUF format.

Community Discussions on AI Models and Tools

The community discussed a range of AI models and tools, including using Autogen with multiple servers and running dual instances of LM Studio. Users shared experiences with integrating Open Interpreter (OI) with LM Studio, highlighting performance issues and the cost of pairing it with GPT-4. The release of the OLMo paper sparked debates on evaluation scores, training code, and normalization choices. Other prominent topics were scaling n-grams, using Infini-gram, tagging backtranslations for LLMs, and evaluating synthetic data generation strategies. Members also shared insights on the Gaussian Adaptive Attention library, new research papers, and upcoming projects.

Perplexity AI Top Trends and Discussions

Cryptic Contemplation

User @tbyfly shared a pondering emoji, but without additional context or discussion, the intent behind the message remains a mystery.

Approval of Content

@twelsh37 seemed pleased with some unspecified content, describing it as a 'jolly decent watch,' though no details about the content were provided.

Embracing Perplexity through Blogging

New community member @.sayanara shared their enthusiasm about discovering Perplexity AI and linked a blog post praising AI's potential to combat misinformation. They advocate for AI to be used responsibly, nudging people towards clarity and facts, as discussed in both their blog article and book, Pandemic of Delusion.

Surprising Top App Trends

@bartleby0 commented on the notable absence of Facebook from a list of top apps, calling it 'interesting', but did not provide a link or elaborate further.

LlamaIndex - General Messages

- @sudalairajkumar explores enhancing RAG with data science, guidance on dataset evaluation, and model selection in a detailed blog post.

- @whitefang_jr demonstrates LlamaIndex compatibility with other LLMs and provides integration guidance and an example notebook for using Llama-2.

- User @.Jayson faces Postgres connection issues; suggestions include customizing the PG vector class and considering connection pooling.

- @cheesyfishes reacts to a spam alert, taking action against harmful content.

- @ramihassanein seeks help with MongoDB pipeline configuration, and @cheesyfishes suggests updating the MongoDB vector store class.

- @princekumar13 looks for PII anonymization solutions, while @erizvi is interested in a specialized LLM for scheduling.

- @amgadoz shares insights on OpenAI's Whisper model, focusing on its architecture and functionality.

- @refik0727 asks for resources on building chatbots with AWS SageMaker, Mistral, and Llama models.

- Multiple users alert the community about LangChain AI Twitter's potential security breach.

- @andysingal explores Step-Back Prompting with LangChain for enhanced language processing capabilities.

- @markopolojarvi, @alcazarr, and @solac3 warn of a potential scam involving LangChain AI Twitter being hacked.

- User @hiranga.g inquires about compatibility of get_openai_callback() with ChatOpenAI for streaming.

- @robot3yes introduces Agent IX, an autonomous GPT-4 agent platform, on GitHub.

- @speuce shares ContextCrunch, an API for prompt compression integrating with LangChain.

- @marcelaresch_11706 highlights a job opportunity at Skipp for an OpenAI full-stack developer focusing on backend development.

- @_jp1 plans to switch from a 7b model to Mixtral due to limitations and welcomes help with the transition.

Different Topics in AI Enthusiasts Conversations

AI enthusiasts in the Discord channels discussed the performance of Nomic Embed compared to OpenAI's Ada and Small models, the transition from Weaviate to pgvector with HNSW, experiments with CUDA optimization for image processing, and the use of thread coarsening to increase memory efficiency. They also discussed deploying OSS models to AWS using vLLM, saving and reusing chains of thought in model prompts, and leveraging PDF documentation for GPT training. These conversations reflect the varied interests and challenges across different areas of research and development.

FAQ

Q: What is the key focus of the Discord conversations described in the essay?

A: The Discord conversations focus on various aspects of AI development and research, including discussions on AI models, programming languages, GPU shortages, open-source vs. proprietary software, fine-tuning LLMs, weight freezing in models, upcoming AI models like Qwen2, training with 4-bit optimizer states, the value of N-gram models, challenges with backdoor training, and completed projects like Project Obsidian.

Q: What are some of the highlighted topics from the HuggingFace Discord channels?

A: Highlighted topics from the HuggingFace Discord channels include the release of the Ukrainian wav2vec2 bert model, discussions on video generation tools, dataset selection for academic projects, advancements in compression algorithms, AI challenges in law, introduction of new tools like MobileDiffusion for text-to-image generation and I-BERT for accelerated RoBERTa inference, interactions among members, presentations, and sharing of resources like free LLM API access and guides for machine learning workflow improvement.

Q: What are some of the discussions in the LlamaIndex General Messages section of the essay?

A: Discussions in the LlamaIndex General Messages section include enhancing RAG with data science, guidance on dataset evaluation and model selection, demonstrating LlamaIndex compatibility with other LLMs, facing database connection issues, exploring PII anonymization solutions, building chatbots with AWS SageMaker, Mistral, and Llama models, alerting the community about security breaches, introducing new AI platforms like Agent IX and ContextCrunch, and sharing job opportunities.

Q: What are some of the technical topics discussed by AI enthusiasts in the Discord channels?

A: Technical topics discussed include the performance of Nomic Embed compared to OpenAI's Ada and Small models, transitioning from Weaviate to pgvector with HNSW, CUDA optimization for image processing, thread coarsening for memory efficiency, deploying OSS models to AWS using vLLM, saving and reusing chains of thought in model prompts, and using PDF documentation for GPT training.

Q: What concerns were raised regarding API security in the essay?

A: Concerns were raised about the API lacking security measures, posing a risk, which prompted discussions on the importance of implementing adequate security measures to protect the API resources and data from potential vulnerabilities and breaches.
